AI governance has crossed a structural threshold.

What was once an ethics discussion has become an operational, legal, and board-level responsibility. Global data shows a consistent pattern: regulation is accelerating, liability is becoming personal, and costs are rising. Governance increasingly fails not because frameworks are missing, but because no one can credibly carry responsibility when decisions escalate.

This article consolidates global evidence and explains why AIGN Circle exists as a structural response to this new reality.

1. AI Governance Is Now Board-Level and Personal

Across global markets, AI governance has moved decisively into the boardroom:

- 41% of Fortune 500 companies have established dedicated AI Board Committees

- A new executive role, Chief AI Governance Officer, is emerging with compensation levels of USD 280k–450k

- The AI liability insurance market grew from USD 400M to USD 2.1B in 2025, reflecting real legal exposure

- Institutional investors increasingly demand AI governance disclosures in proxy statements

Conclusion:

AI governance is no longer delegable. Accountability is assigned to identifiable individuals at the highest organizational level.

2. Regulation Is Global, Fragmented, and Enforced

Global trends confirm that AI governance is not converging—it is fragmenting:

- By 2026, ~50% of countries are expected to enforce binding AI regulation

- By 2027, ~35% of jurisdictions are expected to impose region-specific AI governance requirements

- Single AI systems increasingly fall under multiple regulatory regimes simultaneously

- Courts are defining AI governance obligations faster than regulators are, including through strict-liability precedents

Conclusion:

Governance decisions are now contextual, jurisdiction-specific, and legally reviewable—they cannot be standardized away.

3. Governance Has Become Expensive and Operationally Heavy

Organizations are discovering the true cost of “doing AI governance”:

- AI governance consumes 5–15% of total AI investment

- Large enterprises require 10–50 FTEs for governance alone

- Annual governance costs include:

  - Governance platforms: USD 50k–500k

  - Monitoring & auditing tools: USD 100k–1M

  - Legal counsel: USD 100k–500k

  - Third-party audits: USD 50k–300k per system

Despite these investments, the report explicitly identifies “governance theater”: policies, committees, and tools without real decision authority.

Conclusion:

Governance has become operational—but not necessarily effective.
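To make the scale of these figures concrete, the annual cost ranges listed in this section can be added up. The sketch below is illustrative only: it assumes the platform, tooling, and legal ranges apply once per organization, that only third-party audits scale per system, and that the ranges can simply be summed. None of those assumptions come from the source data, and the names `FIXED_COSTS`, `AUDIT_COST_PER_SYSTEM`, and `annual_governance_cost` are hypothetical.

```python
# Annual cost ranges in USD (low, high), taken from the list in this section.
# Assumption: these three items are incurred once per organization.
FIXED_COSTS = {
    "governance_platforms": (50_000, 500_000),
    "monitoring_and_auditing_tools": (100_000, 1_000_000),
    "legal_counsel": (100_000, 500_000),
}

# Third-party audits are quoted per system, so they scale with system count.
AUDIT_COST_PER_SYSTEM = (50_000, 300_000)


def annual_governance_cost(num_audited_systems: int) -> tuple[int, int]:
    """Return a rough (low, high) annual cost range in USD.

    Assumption: the individual ranges are independent and additive.
    """
    if num_audited_systems < 0:
        raise ValueError("num_audited_systems must be non-negative")

    fixed_low = sum(low for low, _ in FIXED_COSTS.values())
    fixed_high = sum(high for _, high in FIXED_COSTS.values())

    audit_low, audit_high = AUDIT_COST_PER_SYSTEM
    return (
        fixed_low + audit_low * num_audited_systems,
        fixed_high + audit_high * num_audited_systems,
    )
```

Under these assumptions, an organization with one audited system lands between USD 300k and USD 2.3M per year, and one with five audited systems between USD 500k and USD 3.5M, before counting any of the 10–50 FTEs.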

4. The Talent and Responsibility Gap

Global data shows a structural mismatch:

- Governance professionals are scarce, highly paid, and rotate between roles too quickly

- Average tenure for AI governance roles is ~18 months

- Small and mid-size organizations struggle most: 91% report being unable to continuously oversee AI systems

At the same time, governance success increasingly depends on:

- judgment under uncertainty

- escalation authority

- credibility with regulators, boards, and courts

Conclusion:

This is not a skill gap.

It is a responsibility gap.

5. What Actually Works (and Why It Is Human)

The report identifies practices that work:

- Board-level AI risk reviews

- Red-team exercises simulating AI failure

- External advisory boards

- Governance dashboards tied to incidents and audits

All of these share one property:

they require experienced humans with mandate and accountability.

Tools support governance.

Frameworks describe governance.

People carry governance.

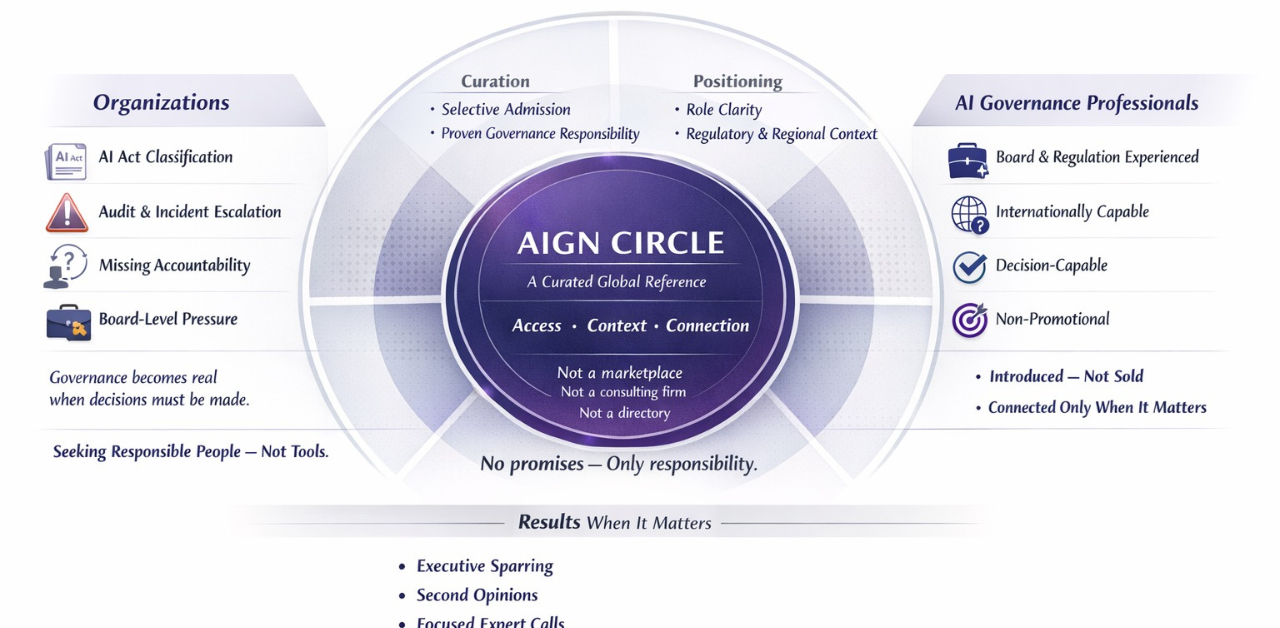

6. Why AIGN Circle Exists

From the data, one conclusion is unavoidable:

AI governance fails at the point of escalation—

when responsibility must be assumed, defended, and carried.

AIGN Circle exists because:

- Accountability cannot be automated

- Responsibility cannot be distributed indefinitely

- Trust cannot be scaled through tools alone

AIGN Circle is a curated global responsibility layer:

providing organizations access to vetted individuals who can credibly assume responsibility when AI governance escalates to boards, regulators, courts, or public scrutiny.

It is not a talent marketplace.

It is not a consulting pool.

It is a reference layer for responsibility.

7. The Structural Distinction

- Landscapes explain what is happening

- Operating systems make governance executable

- Responsibility frameworks determine who can stand behind decisions

AIGN Circle addresses the third—and most fragile—layer.

Final Conclusion

Global regulation, market data, and legal developments all point in the same direction:

AI governance has become operational, enforceable, and personal.

Organizations that fail will not fail because they lacked principles.

They will fail because no one could credibly take responsibility when it mattered.

That is why AIGN Circle exists.