AI governance is discussed everywhere.

But very rarely is one question answered clearly: When does it actually exist?

Across frameworks, principles, maturity models, and toolkits, one pattern keeps repeating:

AI governance is described extensively — but it is not activated when it matters most.

Through extensive analysis of regulatory practice, board-level escalation cases, and real-world AI incidents, one conclusion becomes unavoidable:

AI governance does not fail because rules are missing.

It fails because responsibility and liability do not become effective inside systems at the moment decisions matter.

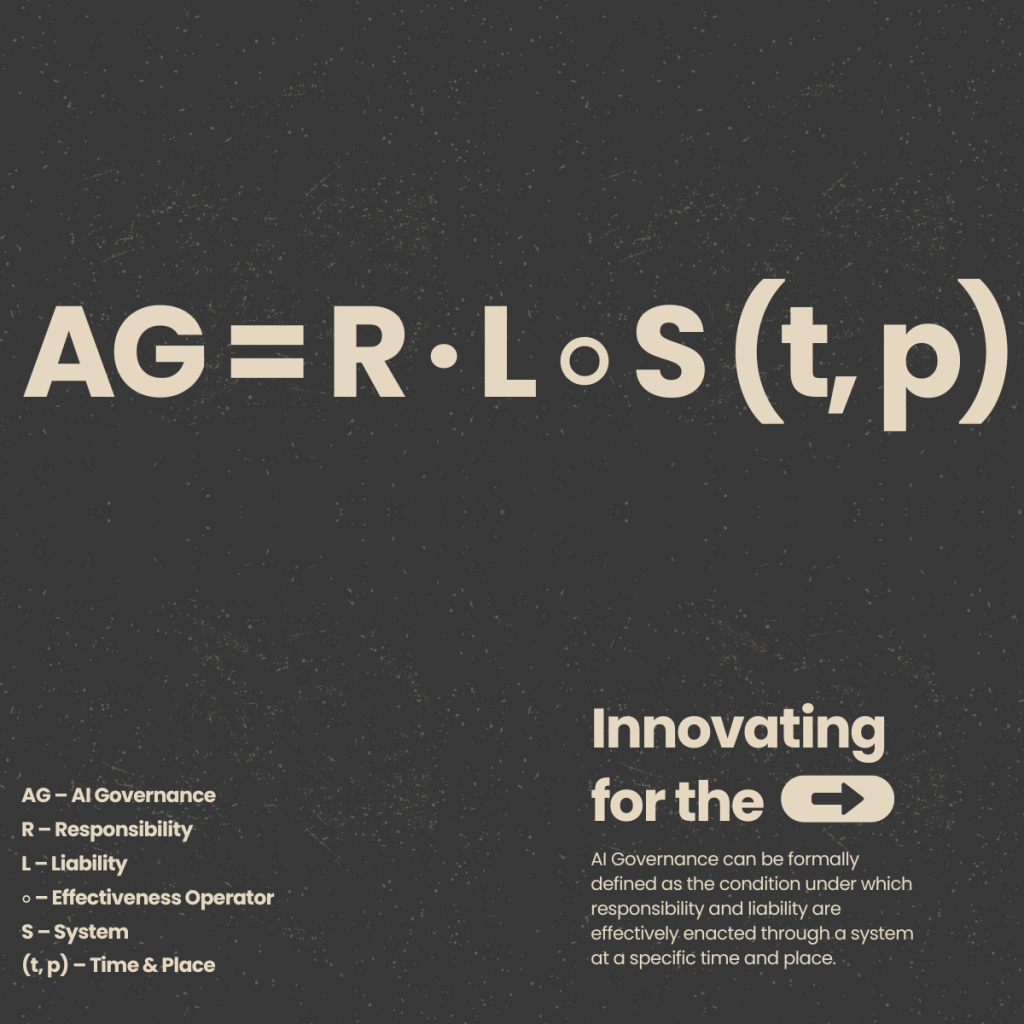

This insight can be expressed in a single condition.

The Existence Condition of AI Governance

AG = R · L ∘ S (t, p)

This is not a framework.

This is not a maturity model.

This is an existence condition.

If any element is missing, AI governance does not partially fail: it collapses entirely.
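One way to make the "collapses entirely" claim precise is to read the condition as a product of indicators. This is an interpretive sketch, not a definition given in this article. It assumes that S(t, p) stands for whatever the system recorded about a decision at time t in context p (empty if nothing was recorded), and that R and L are 0/1 indicators evaluated on that record, both equal to 0 on an empty record.

```latex
\[
  \mathrm{AG}(t, p)
  \;=\; \bigl[(R \cdot L) \circ S\bigr](t, p)
  \;=\; R\bigl(S(t, p)\bigr) \cdot L\bigl(S(t, p)\bigr),
  \qquad R,\, L \in \{0, 1\}.
\]
% Worked case: a named decision-holder exists, but no enforceable liability.
\[
  R\bigl(S(t, p)\bigr) = 1,\quad L\bigl(S(t, p)\bigr) = 0
  \;\Longrightarrow\;
  \mathrm{AG}(t, p) = 1 \cdot 0 = 0.
\]
```

Under this reading there is no intermediate value: a single zero factor, or an empty record, sets the whole product to zero.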

Why Each Element Is Non-Negotiable

R — Responsibility

Without a clearly identifiable decision-holder, governance does not exist.

What remains is administration, coordination, or documentation, but not governance.

AI systems cannot be governed without human agency that can:

- decide,

- justify,

- and stand behind outcomes under pressure.

Anonymous accountability is not accountability.

L — Liability

Responsibility without consequence is normative, not operative.

Governance becomes real only when decisions carry:

- legal,

- regulatory,

- financial,

- or professional consequences.

If no one bears enforceable liability, governance may look mature on paper, but it is inert in reality.

S — System

Governance does not operate in abstraction.

Without a system that:

- enforces controls,

- records decisions,

- preserves evidence,

- and creates auditability,

governance remains symbolic.

Policies do not govern systems.

Systems operationalize governance.
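To make "records decisions, preserves evidence, and creates auditability" concrete, here is a minimal Python sketch of an append-only decision log in which each entry is sealed with a hash of its content and of the previous entry. The class name `DecisionLog`, its methods, and the record fields are hypothetical and chosen for illustration only. The sketch does not cover control enforcement, and a production system would also need secure storage and access control.

```python
import hashlib
import json


class DecisionLog:
    """Append-only log in which every entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self._entries = []

    def append(self, decision: dict) -> str:
        """Record a decision and return the hash that seals it."""
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        entry_hash = self._digest(prev_hash, decision)
        self._entries.append(
            {"decision": decision, "prev_hash": prev_hash, "hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain from the start.

        Editing an entry, or dropping one that is not the last,
        makes the recomputed hashes disagree with the stored ones.
        """
        prev_hash = self.GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != self._digest(prev_hash, entry["decision"]):
                return False
            prev_hash = entry["hash"]
        return True

    @staticmethod
    def _digest(prev_hash: str, decision: dict) -> str:
        # sort_keys gives a stable serialization for the same content
        payload = json.dumps(decision, sort_keys=True) + prev_hash
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

The design choice here is that evidence is preserved by construction: a later edit to a recorded decision does not need to be noticed by a person, because `verify()` will fail.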

(t, p) — Time & Place

Governance is not static.

Every real governance decision happens:

- at a specific moment,

- in a concrete context,

- under situational constraints.

Without temporal and contextual attribution:

- responsibility cannot be assigned,

- liability cannot be enforced,

- and decisions cannot be reconstructed.
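Temporal and contextual attribution can be sketched as a small immutable value that refuses to exist without both parts. The names `Attribution`, `decided_at`, `context`, and `attribute_now` are assumptions made for this example. Requiring a timezone-aware timestamp is a design choice of the sketch, so that "when" stays unambiguous across locations.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Attribution:
    decided_at: datetime  # the moment (t); must carry a timezone
    context: str          # the place or situation (p), e.g. a system or jurisdiction

    def __post_init__(self):
        # A naive timestamp cannot be placed on a shared timeline.
        if self.decided_at.tzinfo is None or self.decided_at.utcoffset() is None:
            raise ValueError("decided_at must be timezone-aware")
        if not self.context.strip():
            raise ValueError("context must not be empty")


def attribute_now(context: str) -> Attribution:
    """Stamp a decision with the current UTC time and the given context."""
    return Attribution(decided_at=datetime.now(timezone.utc), context=context)
```

Because the dataclass is frozen, the attribution cannot be changed after the fact. A correction would have to be recorded as a new decision.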

∘ — Effectiveness Through the System

Responsibility and liability do not exist alongside systems.

They exist only through them.

If responsibility and liability are not embedded into operational systems, they do not scale, persist, or survive escalation.

Why This Changes the Governance Debate

Most AI governance discussions focus on:

- principles,

- ethics,

- maturity levels,

- documentation libraries,

- or compliance checklists.

Those elements are not wrong — but they are insufficient.

The decisive question is not:

How mature is your AI governance?

It is:

If this escalates right now — does your AI governance actually exist?

Can you point to:

- the person who decided,

- the liability that applied,

- the system that recorded it,

- and the exact moment it happened?

If not, governance disappears precisely when it is needed most.
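The four questions above can be turned into a simple check that names exactly what is missing when a decision escalates. This is an illustrative Python sketch. `EscalationRecord`, its field names, and both functions are hypothetical and do not describe an existing tool.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class EscalationRecord:
    decided_by: Optional[str] = None       # the person who decided
    liability: Optional[str] = None        # the liability that applied
    recorded_in: Optional[str] = None      # the system that recorded it
    decided_at: Optional[datetime] = None  # the exact moment it happened


def missing_elements(record: EscalationRecord) -> List[str]:
    """Return the names of the elements that cannot be pointed to."""
    checks = {
        "decided_by": record.decided_by,
        "liability": record.liability,
        "recorded_in": record.recorded_in,
        "decided_at": record.decided_at,
    }
    # None and empty strings both count as "cannot point to it".
    return [name for name, value in checks.items() if not value]


def governance_exists(record: EscalationRecord) -> bool:
    """All-or-nothing: one missing element means the answer is no."""
    return not missing_elements(record)
```

For a record that names only a decision-holder and a recording system, `missing_elements` returns `["liability", "decided_at"]` and `governance_exists` returns `False`.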

From Symbolic Governance to Operational Reality

The formula AG = R · L ∘ S (t, p) draws a hard boundary:

- between governance that looks credible,

- and governance that survives audits, incidents, and courts.

It shifts AI governance from:

- aspiration to activation,

- documentation to decision,

- intent to enforceability.

This is the threshold where governance becomes real.

The Hard Test

Ask a simple question inside your organization:

If a high-risk AI decision escalates right now, does our AI governance exist, or does it dissolve into uncertainty?

The answer determines whether governance is operational or merely declared.

AI governance is not missing ideas.

It is missing existence conditions.

At AIGN Global, we focus exclusively on making AI governance real, where responsibility, liability, systems, time, and place converge into enforceable decisions.

Because governance that does not exist at the decisive moment is not governance at all.