From Regulation to Real Governance

Responsible AI doesn’t start with tools – it starts with structure.

Most AI governance programs fail because they remain abstract: checklists without accountability, policies without enforcement, and compliance without trust.

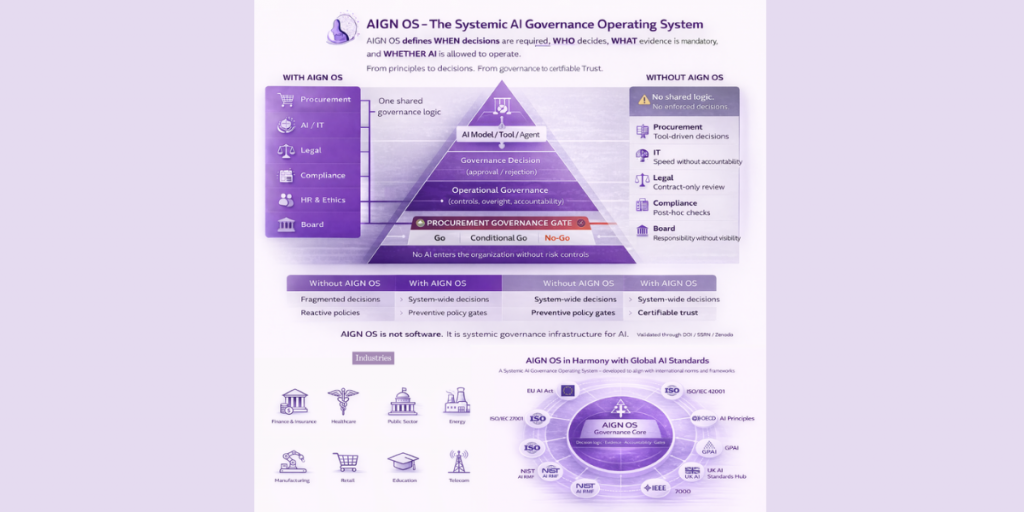

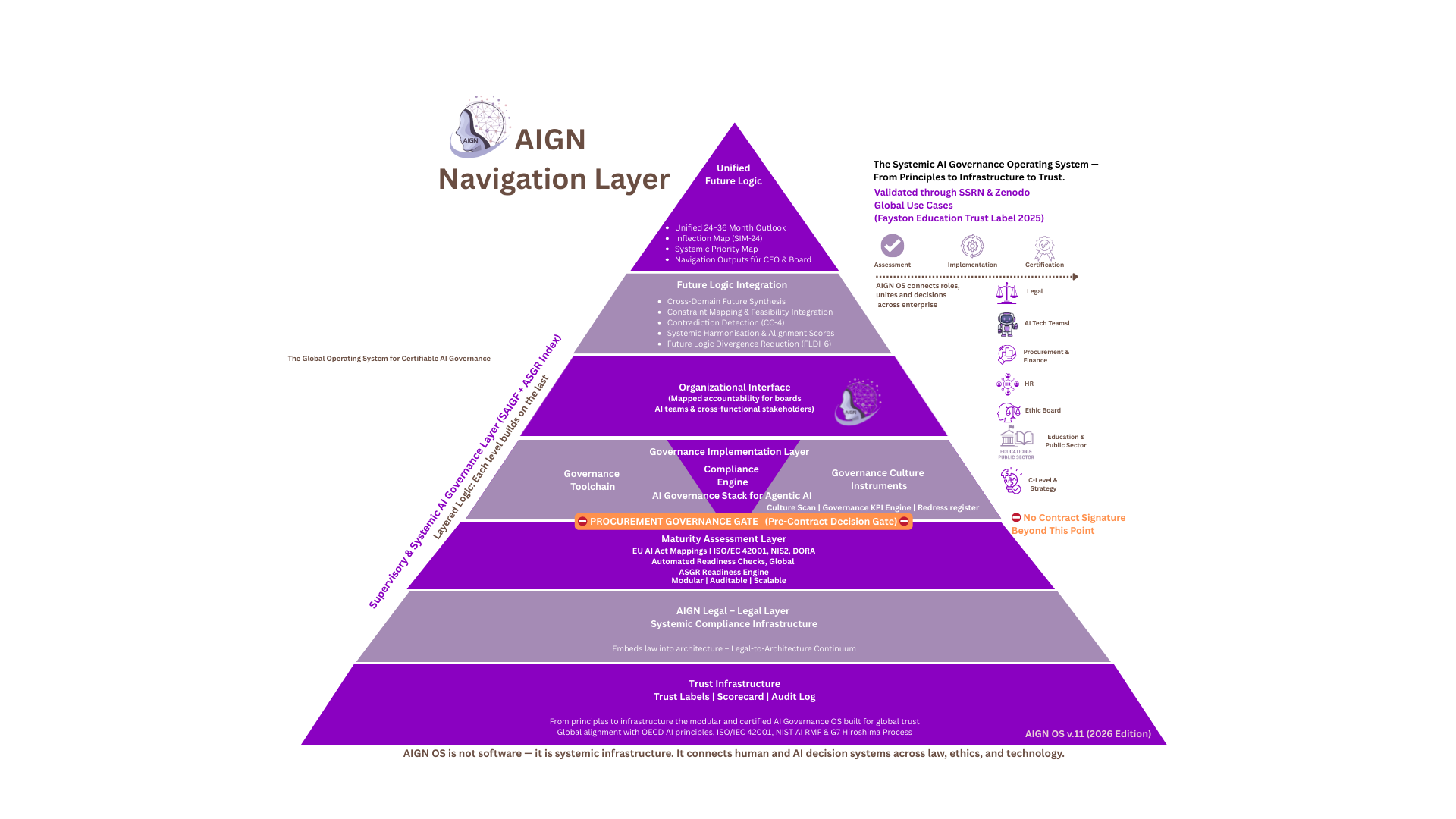

AIGN OS changes that.

It is the world’s first operational, certifiable governance operating system for AI and data — embedding global principles, laws, and standards into verifiable organizational practice.

On this page, you’ll see how organizations use AIGN OS to:

- assess readiness and governance maturity,

- assign roles and responsibilities,

- embed systemic AI governance,

- and achieve verifiable trust through certification.

Whether you’re a public authority, enterprise, school, or startup — this is how responsible AI becomes operational.

The 4-Step Journey to Trusted AI Governance

| Step | What Happens | What You Get | Outcome |
|---|---|---|---|
| 1. Activate AIGN OS | License the system and receive the full governance suite. | Frameworks, toolkits, capability models, trust label pathways, and optional onboarding. | Start your governance architecture – operational and certifiable. |
| 2. Assess Your Readiness | Conduct the AIGN OS Readiness Check (DOI-registered and IP-protected). | A score (0–100), heatmap, quick wins, and a readiness report within 48 hours. | Establish your governance baseline across the EU AI Act, ISO/IEC 42001, NIS2, and DORA. |
| 3. Build & Operate Governance | Implement the AIGN OS 8-Layer Architecture. | Governance roles, RACI matrix, DPIA+, risk-class mapping, and playbooks. | Fully operational governance: traceable, auditable, and scalable. |
| 4. Certify & Communicate Trust | Complete the AIGN Trust Path. | Trust Label, Agentic AI Verification, or SAIGF Maturity Certificate. | Publicly verifiable recognition for responsible AI governance. |
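
Step 3 lists a RACI matrix among its outputs. As a generic illustration of the RACI convention only (the format of the AIGN OS toolkit is not described on this page), the sketch below checks a hypothetical task-to-role matrix against two widely used RACI rules: each task has exactly one Accountable (A) role and at least one Responsible (R) role. The task and role names, the `validate_raci` function, and the dictionary layout are assumptions made for this example.

```python
from collections import Counter

# Standard RACI codes: Responsible, Accountable, Consulted, Informed
VALID_CODES = {"R", "A", "C", "I"}


def validate_raci(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return the problems found in a hypothetical {task: {role: code}} matrix."""
    problems = []
    for task, assignments in matrix.items():
        # Flag any code outside R/A/C/I
        for role, code in assignments.items():
            if code not in VALID_CODES:
                problems.append(f"{task}: invalid code {code!r} for {role}")
        counts = Counter(assignments.values())
        # Common RACI rule: exactly one accountable role per task
        if counts["A"] != 1:
            problems.append(f"{task}: expected exactly one 'A', found {counts['A']}")
        # Common RACI rule: at least one role does the work
        if counts["R"] < 1:
            problems.append(f"{task}: no 'R' assigned")
    return problems


if __name__ == "__main__":
    # Hypothetical tasks and roles, for illustration only
    matrix = {
        "Classify system risk": {
            "AI Governance Lead": "A",
            "Data Science": "R",
            "Legal": "C",
            "Board": "I",
        },
        "Approve deployment": {"Product Owner": "R", "Legal": "C"},
    }
    for problem in validate_raci(matrix):
        print(problem)
    # prints: Approve deployment: expected exactly one 'A', found 0
```

Keeping the matrix as data rather than as a static document makes gaps such as a task with no accountable owner detectable automatically, which is the kind of role clarity the page contrasts with unclear roles in AI risk handling.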

Use Case Highlights

| Sector | Example Use Case |
|---|---|
| Public Administration | Municipality applies AIGN OS to prepare algorithmic systems for EU AI Act compliance and national oversight. |
| Education | National ministry uses the Education Framework to govern classroom AI tools and earn the Education Trust Label. |
| Finance & Banking | Institution implements the Agentic AI Framework for audit-proof risk transparency and DORA readiness. |
| Healthcare | Hospital aligns with ISO/IEC 42001 using AIGN’s maturity model and Trust Infrastructure. |
| Startups / SMEs | AI startup deploys the SME Framework to secure funding and demonstrate responsible innovation. |

Without AIGN OS vs. With AIGN OS

| Without AIGN OS | With AIGN OS |
|---|---|
| Siloed policies & disconnected audits | One integrated, certifiable governance infrastructure |
| Unclear roles in AI risk handling | Role-based architecture with RACI clarity |
| Regulatory panic & reactive responses | Maturity-driven, auditable, proactive governance |
| Trust gaps with partners & citizens | Verifiable trust labels and certification evidence |
| Fragmented tools & unclear processes | Unified governance logic mapped to global standards |

Results You Can Expect

- Clear governance structure and accountability

- Readiness for the EU AI Act, ISO/IEC 42001, and the EU Data Act

- Recognized trust signals and measurable assurance

- Seamless integration with ESG and procurement frameworks

- Reduced compliance costs, stronger stakeholder trust

Typical AIGN OS Deployment Flow

| Phase | Focus Area | Tools / Outputs |
|---|---|---|
| Orientation | Governance briefing & framework selection | AIGN Governance Briefing™ |
| Diagnosis | Readiness check & baseline mapping | AIGN OS Readiness Report, ASGR baseline |
| Operation | Implementation of the 8-Layer Architecture | Toolchain, Legal Layer, Role Models |
| Verification | Certification & communication | Trust Labels, SAIGF Certificates, Public Registry |

What Comes With Every License

| Included in AIGN OS | Details |
|---|---|
| Framework Access | Tailored to your license type (e.g., Global, Education, Agentic AI, Data, SME). |
| Self-Assessment Tools | Browser-based Readiness Checks and ASGR diagnostics; no setup required. |
| Governance Toolkits | Templates, role models, matrices, and compliance playbooks. |
| Trust Certification Logic | Built-in label pathways and verification support. |
| Continuous Updates | Aligned with current regulation and maintained internationally. |
| Optional Onboarding | Team workshops, executive briefings, and local partner support. |

Why AIGN OS Works in Practice

| Systemic Component | Governance Function | Result |
|---|---|---|
| AIGN Legal Layer | Translates law into architecture | Continuous compliance evidence |
| ASGR Index | Benchmarks systemic readiness | Comparable maturity scores |
| Governance Toolchain | Standardizes roles and workflows | Operational consistency |
| SAIGF Oversight Layer | Embeds board-level accountability | Fiduciary assurance |
| Trust Infrastructure | Provides certified, visible proof of responsibility | Public and investor trust |

AIGN OS in One Sentence

AIGN OS turns your AI governance from a regulatory burden into a measurable, certifiable advantage.

Because in the age of intelligent systems, trust is not a statement — it’s a system.

Legal Notice on Intellectual Property

© 2025 Patrick Upmann · AIGN – Artificial Intelligence Governance Network

All terminology, structures, frameworks, and layered architecture of AIGN OS are protected under international copyright, trademark, and IP law.

Published as DOI-registered scientific work, establishing prior art, academic recognition, and enforceable authorship.

Unauthorized use or reproduction will result in legal enforcement under the Berne Convention & WIPO Treaty.